Symptoms

On 1024 (October 24, Programmers' Day), the one day programmers are supposed to enjoy, an alert came in.

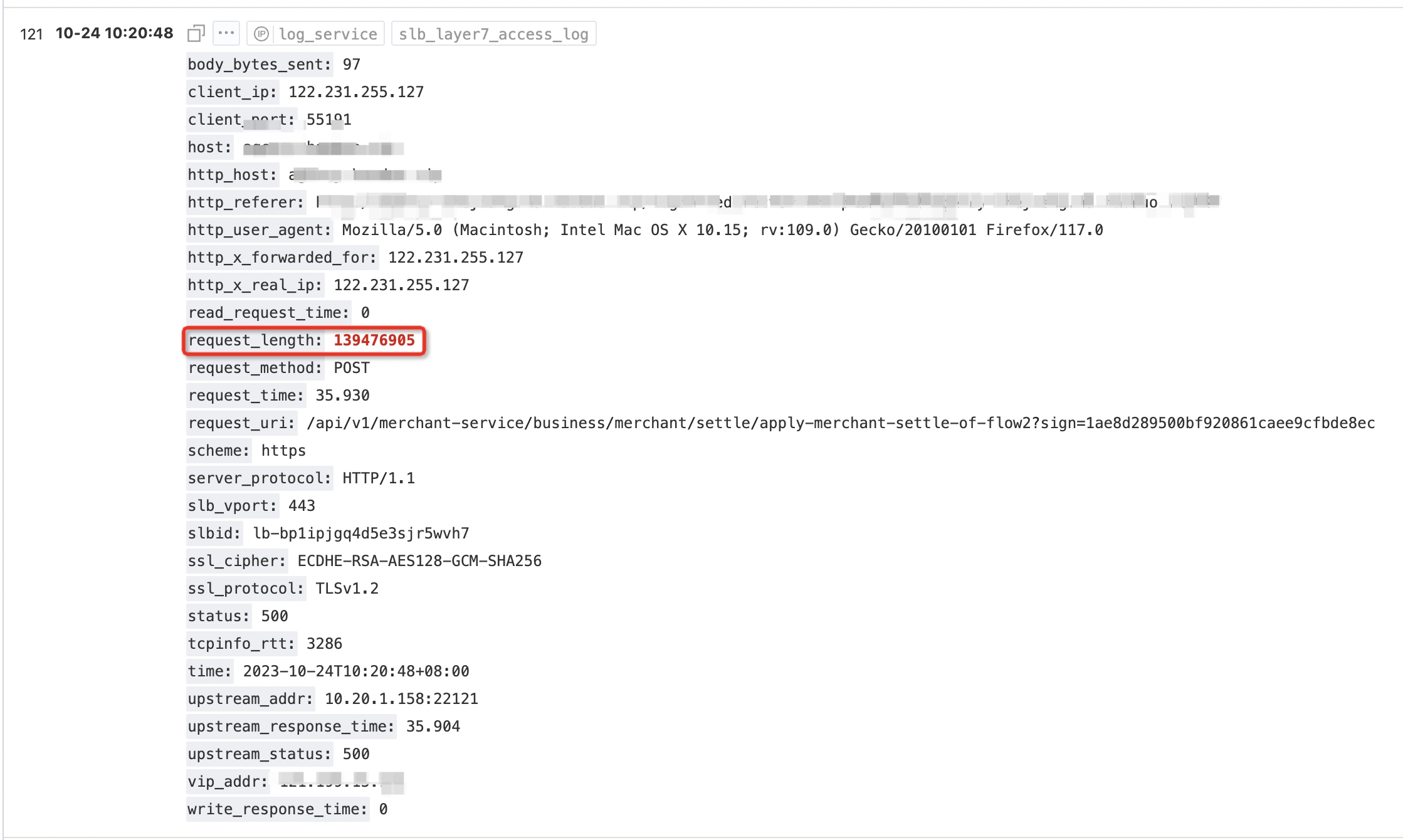

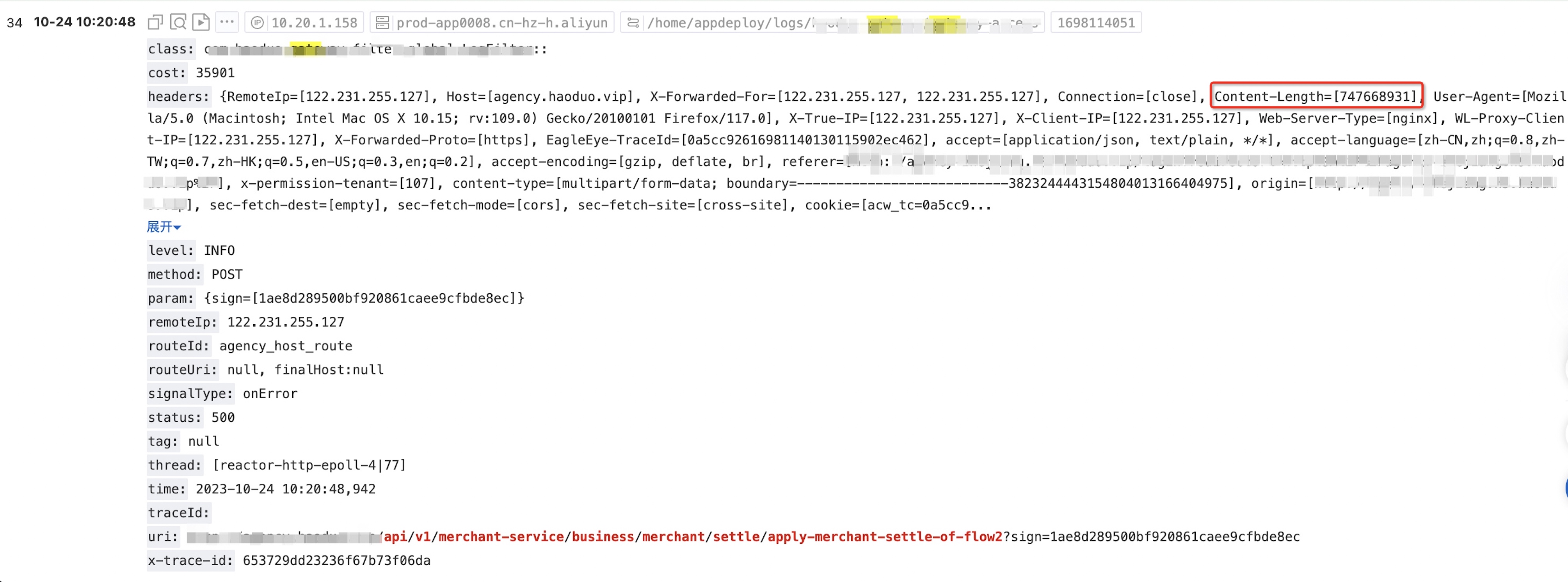

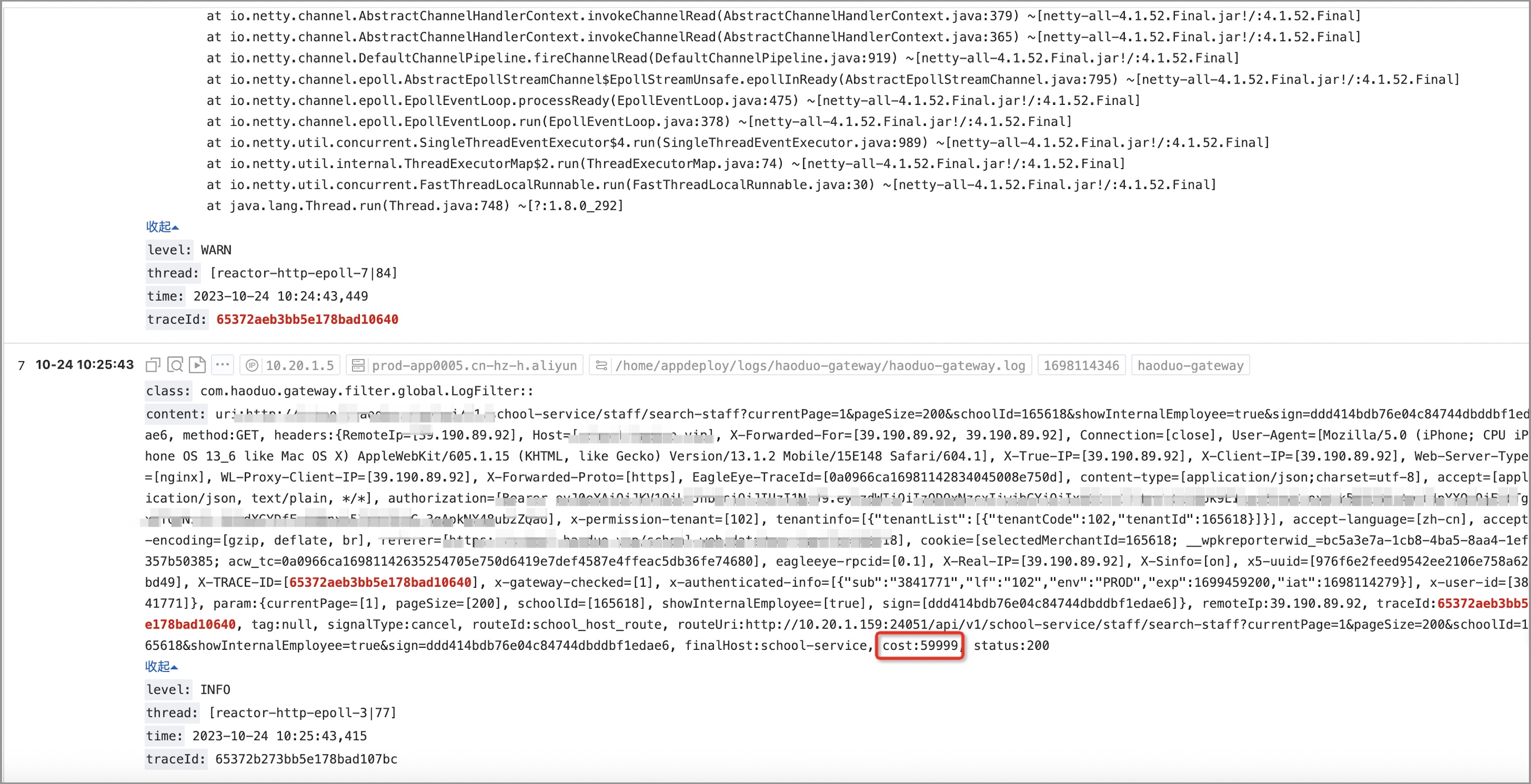

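Years of development experience told me that an OutOfMemory error is never a minor issue and had to be investigated right away. I first located the request logs for the offending request at both the SLB layer and the spring-cloud-gateway layer.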

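apply-merchant-settle-of-flow2 is the endpoint for organization onboarding applications, which requires uploading images, videos, and similar material. The previous implementation received the files directly in the web container. From the two request logs above, it is clear that a user uploaded a large file. However, the sizes recorded by the two layers differ widely: the SLB recorded just over 100 MB, while the Content-Length request header says more than 700 MB. My guess is that the SLB's request_length is not taken from the Content-Length header but reflects the amount of data actually read. In other words, the user was uploading a 700+ MB file, and by the time roughly 100 MB had been transferred, spring-cloud-gateway failed with an out-of-memory error. The official SLB documentation describes the request_length field as: the length of the request message, including the start line, HTTP headers, and HTTP body.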

Stopping the bleeding

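A 700+ MB file made me break into a cold sweat: large file uploads and downloads like this can take down the whole system if handled badly. There was no time to lose. I did not want to be the sacrificial offering on 1024, and Yuque had already been roasted from all sides for being down for N hours the day before. Hurry, hurry!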

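To stop the bleeding first, limiting the request size was the natural idea, and spring-cloud-gateway provides a built-in filter for this: org.springframework.cloud.gateway.filter.factory.RequestSizeGatewayFilterFactory. I happily configured it, first with a small limit to test the behavior, and the response code did indeed become 413 (request too large). To be thorough, I then tried a larger file, and it threw the same exception as in production. At that point I began to doubt the filter's implementation: was it not deciding based on Content-Length?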
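To make the question concrete, here is a minimal plain-Java sketch of what a Content-Length-based size check amounts to. It is a simplified model, not the gateway's actual source: the class name, the method, and the 5 MB limit are all hypothetical, and letting requests without a declared length pass is an assumption of this model.

```java
import java.util.Map;

// Simplified model of a Content-Length based size check (hypothetical names).
class RequestSizeCheck {
    static final int OK = 200;
    static final int PAYLOAD_TOO_LARGE = 413;

    // Returns the status this model would produce for the given request headers.
    static int check(Map<String, String> headers, long maxBytes) {
        String value = headers.get("Content-Length");
        if (value == null) {
            return OK; // assumption: no declared length means the request passes
        }
        long declared = Long.parseLong(value.trim());
        return declared > maxBytes ? PAYLOAD_TOO_LARGE : OK;
    }

    public static void main(String[] args) {
        long limit = 5L * 1024 * 1024; // hypothetical 5 MB limit
        System.out.println(check(Map.of("Content-Length", "1024"), limit));      // 200
        System.out.println(check(Map.of("Content-Length", "734003200"), limit)); // 413
    }
}
```

A check like this only looks at a header and never touches the body, so on its own it cannot be what runs out of memory.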

不过看了上面贴出的源代码,很快就否定了上述“愚蠢的”猜想。于是又有一个新的猜想在我的脑海中浮现:是不是有会去读取body数据的Filter并且在

RequestSizeFilter之前执行?我试着把

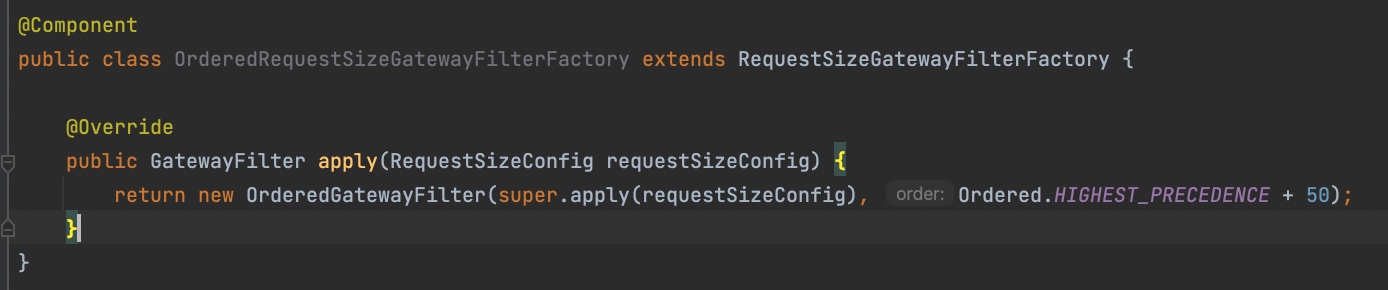

RequestSizeGatewayFilterFactory的优先级调高

可以通过

OrderedGatewayFilter来指定了它的order,因为spring-cloud-gateway里它的GlobalFilter和GatewayFilter是并列通过order来排序的。所以通过这样的方式我们就把RequestSizeGatewayFilter的优先级提高了。改完之后,发现请求拦截得很丝滑,没有之前的问题了。
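The effect of the order change can be sketched with a small plain-Java model. This is not Spring code: the filter names and the order values (-100, 1, -200) are hypothetical and chosen only to show that a lower order runs first and that a rejecting filter stops the chain.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Simplified model of order-based filter sorting; names and order values are hypothetical.
class FilterOrderDemo {
    static final class NamedFilter {
        final String name;
        final int order;
        final boolean rejects; // true if this filter ends the chain (e.g. responds 413)

        NamedFilter(String name, int order, boolean rejects) {
            this.name = name;
            this.order = order;
            this.rejects = rejects;
        }
    }

    // Sorts all filters together by order (lower runs first) and returns
    // the names of the filters that actually ran.
    static List<String> run(List<NamedFilter> filters) {
        List<NamedFilter> sorted = new ArrayList<>(filters);
        sorted.sort(Comparator.comparingInt((NamedFilter f) -> f.order));
        List<String> executed = new ArrayList<>();
        for (NamedFilter f : sorted) {
            executed.add(f.name);
            if (f.rejects) {
                break; // the chain stops here; later filters never see the request
            }
        }
        return executed;
    }

    // Sends an oversized request through a body-reading filter and a size check
    // whose order is supplied by the caller.
    static List<String> oversizedRequest(int sizeCheckOrder) {
        List<NamedFilter> filters = new ArrayList<>();
        filters.add(new NamedFilter("bodyReadingFilter", -100, false));
        filters.add(new NamedFilter("requestSizeCheck", sizeCheckOrder, true));
        return run(filters);
    }

    public static void main(String[] args) {
        System.out.println(oversizedRequest(1));    // [bodyReadingFilter, requestSizeCheck]
        System.out.println(oversizedRequest(-200)); // [requestSizeCheck]
    }
}
```

With the size check ordered after the body-reading filter, the body is read before the request can be rejected; with the size check ordered first, the body-reading filter never runs.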

Locating the cause

The next step was to locate the cause: which earlier filter, or which piece of logic, was causing the out-of-memory error?

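The first suspect was one of our own custom filters: RequestBodyAddCacheGlobalFilter. It reads and caches the entire body so that later filters can use the body for signature verification. However, file upload requests had already been given special handling in it.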

So this filter should not be to blame. How, then, to find the problematic filter? I tried using arthas.

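Unfortunately, no filter showed a long execution time. One odd thing did stand out, though: there are 26 filters on the FilterChain in total, and when a large file upload fails, the execution log stops at AdaptCachedBodyGlobalFilter, whereas in the normal case the execution logs of all filters are printed. So I focused on AdaptCachedBodyGlobalFilter. In addition, after enabling Hooks.onOperatorDebug();, the exception trace also pointed to the AdaptCachedBodyGlobalFilter class.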

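AdaptCachedBodyGlobalFilter is what spring-cloud-gateway uses to cache the body. An HTTP request body can only be read once, which does not work in some scenarios, such as the gateway's retry mechanism. In those cases the body has to be cached first, and every later read is served from the cache. So the body-caching logic in AdaptCachedBodyGlobalFilter only takes effect on routes where RetryGatewayFilter is enabled.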
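The read-once problem and the cache-then-replay idea can be illustrated with plain java.io streams. This is only an analogy under stated assumptions: the class and method names are hypothetical, and the gateway works with reactive DataBuffers rather than InputStream.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Plain-Java analogy for caching a one-shot body so retries can re-read it.
class CachedBodyDemo {
    // Drains the one-shot body into memory. The whole body is now held at once,
    // which is what becomes expensive for very large uploads.
    static byte[] cache(InputStream body) {
        try {
            return body.readAllBytes();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Every attempt (the first try and each retry) reads a fresh view of the cache.
    static String readAttempt(byte[] cached) {
        return new String(cache(new ByteArrayInputStream(cached)), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        InputStream body = new ByteArrayInputStream("payload".getBytes(StandardCharsets.UTF_8));
        byte[] cached = cache(body);
        System.out.println(cache(body).length);  // 0: the original stream is already consumed
        System.out.println(readAttempt(cached)); // payload
        System.out.println(readAttempt(cached)); // payload again, as a retry would see it
    }
}
```

The price of being able to replay the body is that the complete body must sit in memory for the lifetime of the request.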

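So would removing RetryGatewayFilter from the corresponding route solve the problem? We tried it, and after the removal there was indeed no out-of-memory exception, as expected. But which step inside this filter actually takes the time? The duration that arthas reported was very short, which is probably related to reactor's asynchronous, non-blocking mechanism.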

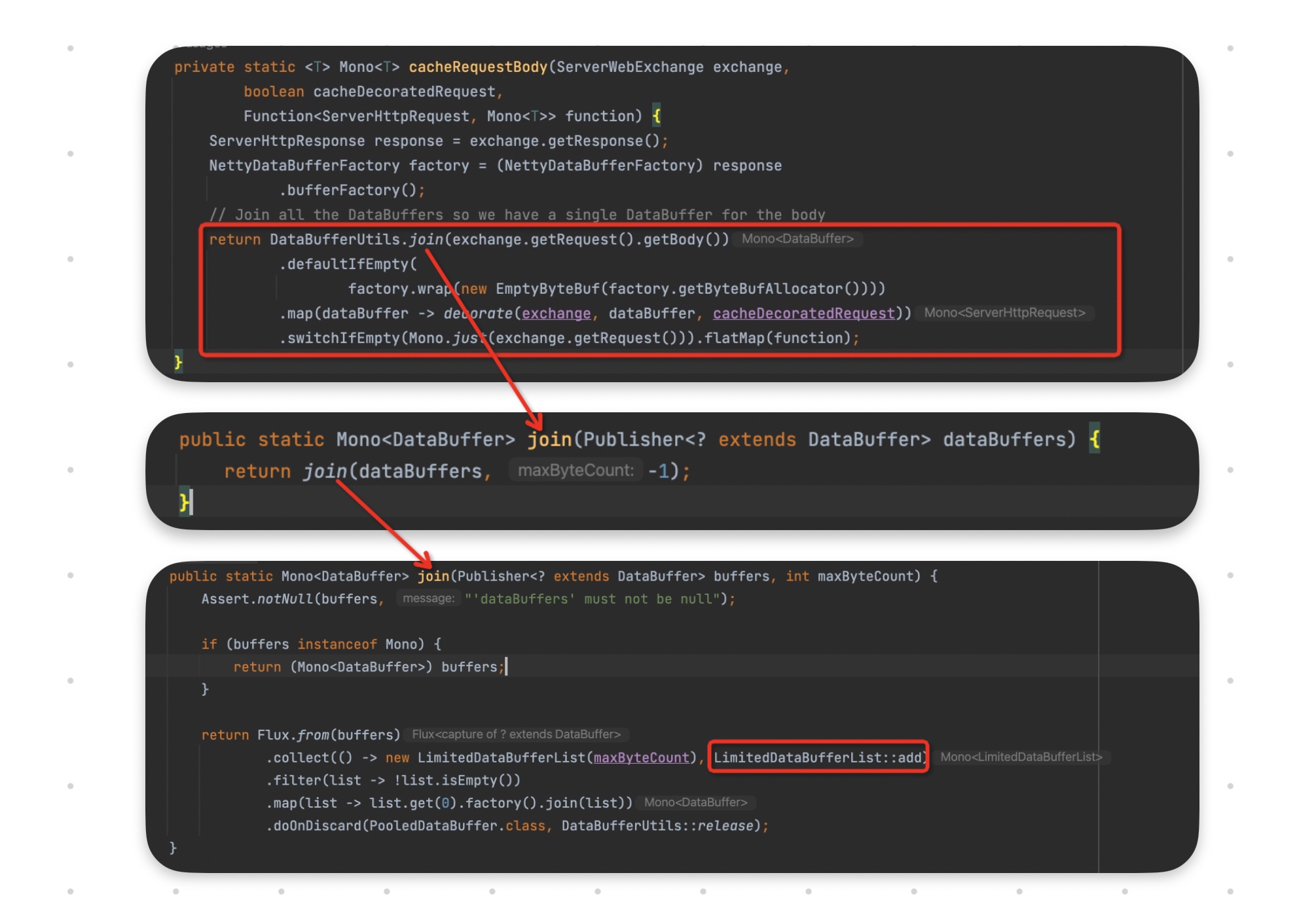

Let's look further into the code and find the logic that caches the body.

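The part highlighted in the screenshot appears to read all the buffers of the body and aggregate them into one large DataBuffer that serves as the cache. We used the arthas monitor command to see how these calls behave.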

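Seeing this many invocations, it felt like we were finally closing in on the slow code. The request body is very large and each read can only take a limited amount of data, so it has to be read a great many times. The speed here also depends heavily on the client's upload speed: the epoll thread can only step in and read once data is ready. This also explains why responses were always fast when I ran it locally, whether or not an exception was thrown.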
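A back-of-the-envelope calculation shows the scale involved. The 8 KB read size and the 2 MB/s upload speed below are assumptions chosen purely for illustration; neither value comes from the incident.

```java
// Rough estimate of read count and arrival time for a large upload.
class UploadReadEstimate {
    // Number of read events when each read delivers at most chunkBytes.
    static long readsNeeded(long bodyBytes, long chunkBytes) {
        return (bodyBytes + chunkBytes - 1) / chunkBytes;
    }

    // Lower bound on how long the body takes to arrive at a given upload rate.
    static double secondsToArrive(long bodyBytes, long bytesPerSecond) {
        return (double) bodyBytes / bytesPerSecond;
    }

    public static void main(String[] args) {
        long body = 700L * 1024 * 1024; // roughly the size of the upload in question
        long chunk = 8L * 1024;         // assumed size of a single read
        long rate = 2L * 1024 * 1024;   // assumed client upload speed: 2 MB/s
        System.out.println(readsNeeded(body, chunk));    // 89600
        System.out.println(secondsToArrive(body, rate)); // 350.0
    }
}
```

Under these assumptions the body arrives in tens of thousands of small reads spread over several minutes, which matches the large invocation counts and explains why a local test over loopback finishes almost instantly.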

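At this point, we have established the following:

- Enabling RetryGatewayFilter indirectly enables the body-caching logic in AdaptCachedBodyGlobalFilter, and an oversized body can exhaust memory (DirectBuffer is used by default). I do not yet fully understand how this memory is allocated, but usage does not seem to track the file size exactly, since according to the SLB log only a little over 100 MB had been read.

- The total request time depends heavily on the client's upload speed, but thanks to reactor's asynchronous, non-blocking IO, threads do not block and the epoll thread can interleave other requests.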

Another kind of exception

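This is enough to explain the problem described at the start of this article. Looking more closely later, however, we found a second kind of exception: its exception message contains no checkpoint related to the requested endpoint, and the corresponding endpoint has no file upload logic. Instead, it occurs after the downstream service has responded, when spring-cloud-gateway runs out of memory while reading the response data. Using the traceId on the exception, we found the corresponding request log as well.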

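The whole request spent close to 60 s in spring-cloud-gateway. My impression is that the request flow was simply interrupted after the out-of-memory exception, and that the response from spring-cloud-gateway was eventually triggered only because the SLB has a timeout configured (60 s). I checked several such requests, and all of them ended at 60 s.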

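As an additional note, spring-cloud-gateway normally does not read and hold the downstream response either; it writes it straight through to the response. In our setup the response may be rewritten, which is why the response body ends up being read.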

Out of memory?

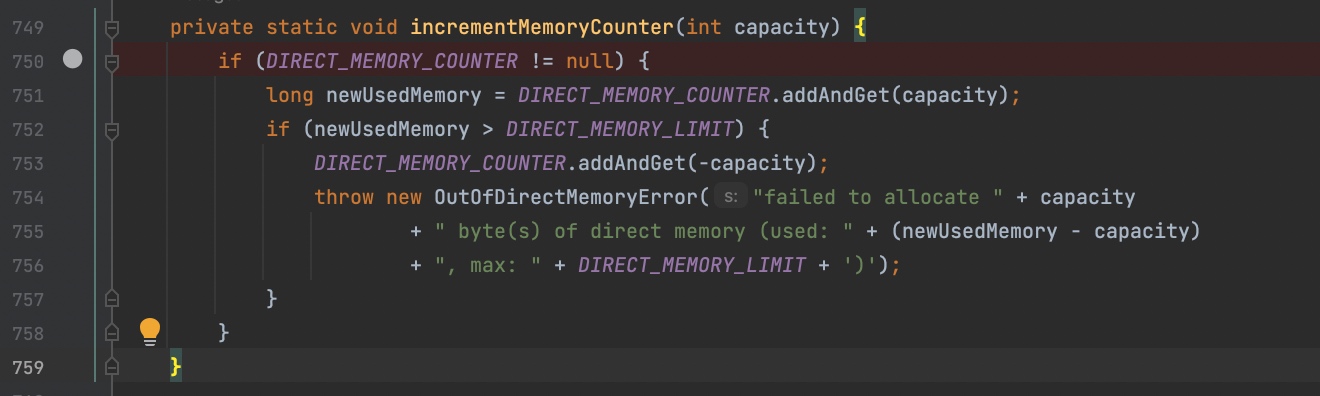

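This made me wonder: was the memory used by the earlier file upload never released? Judging from the earlier exception stack, it indeed does not seem to have been released.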

Let's look at the source code that this part of the stack trace corresponds to.

Monitoring off-heap memory usage

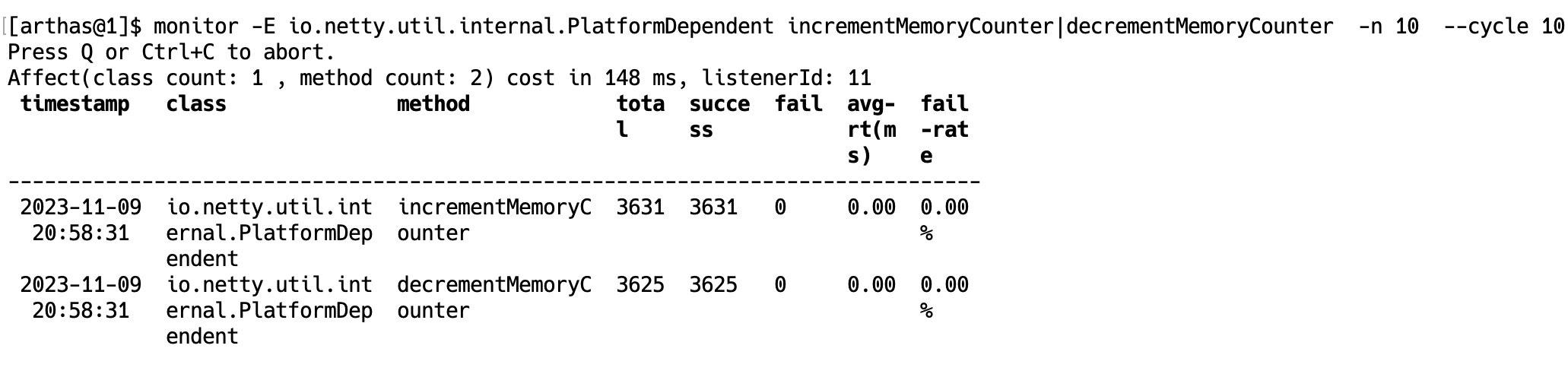

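netty maintains an internal counter that records how much off-heap memory is currently allocated, and it raises an error once the limit is exceeded. So can we monitor this value and watch how it changes?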
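The behavior of such a counter can be modeled in a few lines of plain Java. This is a simplified sketch and not netty's code: the class name, the exception type, and the message format are hypothetical.

```java
import java.util.concurrent.atomic.AtomicLong;

// Simplified model of an allocation counter with a hard limit (hypothetical names).
class DirectMemoryCounterModel {
    private final AtomicLong used = new AtomicLong();
    private final long limit;

    DirectMemoryCounterModel(long limit) {
        this.limit = limit;
    }

    // Called on allocation; fails if the running total would exceed the limit.
    void increment(long bytes) {
        long newUsed = used.addAndGet(bytes);
        if (newUsed > limit) {
            used.addAndGet(-bytes); // roll back the failed reservation
            throw new IllegalStateException("failed to allocate " + bytes
                    + " byte(s) (used: " + (newUsed - bytes) + ", max: " + limit + ")");
        }
    }

    // Called on release; if this is never called, the counter can only grow.
    void decrement(long bytes) {
        used.addAndGet(-bytes);
    }

    long used() {
        return used.get();
    }

    public static void main(String[] args) {
        DirectMemoryCounterModel counter = new DirectMemoryCounterModel(100);
        counter.increment(60);
        counter.decrement(60);              // released: back to 0
        counter.increment(60);              // allocated again and never released
        System.out.println(counter.used()); // 60
        try {
            counter.increment(60);          // 60 + 60 exceeds the limit of 100
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

In this model, an allocation that is never paired with a decrement permanently reduces the headroom, so even a small later request can be the one that hits the limit.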

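We first monitored it under the default pooled allocation mode. In this mode we found that the memory is in fact not released, and monitoring method calls showed no calls to io.netty.util.internal.PlatformDependent#decrementMemoryCounter either. The end result is that the value just keeps growing.

Unpooled memory allocation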

We then tried allocating memory in unpooled mode:

-Dio.netty.allocator.type=unpooled

This time there were both increment and decrement calls, in roughly equal numbers.

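Although the same out-of-memory error was still reported along the way (this is probably unavoidable), everything was reclaimed once the request finished. A related issue has been raised on GitHub as well, but it has not been resolved yet. The workaround everyone uses is the unpooled allocation mode.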

Netty Native memory increases continuously when using AdaptCachedBodyGlobalFilter

Updated Mar 22, 2024

Alibaba's EDAS also mentions this in its write-up on enhancing spring-cloud-gateway:

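Memory leak: this issue comes from CSB's production practice. Spring Cloud Gateway relies on netty for IO communication. Those familiar with netty will know that it has a read/write buffer design: small payloads generally hit the chunked buffer, while large payloads, such as file uploads, trigger a new memory allocation. Spring Cloud Gateway has a logic flaw in how it integrates with netty, so the newly allocated pooled memory cannot be fully reclaimed, which leaks off-heap memory. Moreover, this off-heap memory is allocated by netty itself through unsafe and cannot be observed with regular JVM tools, which makes it very hard to spot. EDAS recommends adding the startup parameter -Dio.netty.allocator.type=unpooled to Spring Cloud Gateway applications, so that the temporary memory allocated when a request misses the chunked buffer is not pooled, which sidesteps the leak. -Dio.netty.allocator.type=unpooled does not degrade performance, because only large payloads trigger this allocation, and best practice for a gateway is to not allow requirements such as file upload in the first place; adding this parameter is a fallback for non-mainstream scenarios.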

Improving how we use the gateway

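The main cause of this problem was that we enabled the retry mechanism in spring-cloud-gateway, so every request had its body cached, and with an oversized body that can run out of memory. Turning retries off would be one solution, but in general gateway retries are still necessary, for example when a downstream service restarts, to keep the service stable. So the improvements considered below all assume that retries are required.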

Since the problem only arises when the body is too large, can we give that case special handling? Someone on GitHub proposed two solutions:

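- Add a configurable threshold that limits the size of the in-memory cache, and spill to disk once it is exceeded

- Do not allow retry at all for requests whose body is too large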

Conditional Caching in ServerWebExchangeUtils.cacheRequestBody

Updated Mar 14, 2024

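The first option looks worrying in terms of performance, and the second seems more reliable. I think we can be even more aggressive, though: we added a global request size limit filter and, based on the request sizes seen in production, set it to 1 MB for now. Apart from file uploads, normal request bodies are never very large.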
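The second option boils down to a per-request decision made before the body is touched. The following is a minimal sketch of that decision only, with hypothetical names; treating a missing Content-Length as "do not cache" is an assumption of this sketch, not something taken from the GitHub proposal.

```java
// Sketch of "no retry for large bodies" as a pure decision function (hypothetical names).
class RetryCachePolicy {
    enum Decision { CACHE_AND_ALLOW_RETRY, FORWARD_WITHOUT_RETRY }

    // contentLength < 0 stands for a request without a declared length.
    static Decision decide(long contentLength, long cacheThresholdBytes) {
        if (contentLength < 0 || contentLength > cacheThresholdBytes) {
            return Decision.FORWARD_WITHOUT_RETRY; // body is streamed through, never cached
        }
        return Decision.CACHE_AND_ALLOW_RETRY;     // small body: safe to hold in memory
    }

    public static void main(String[] args) {
        long threshold = 1024L * 1024; // 1 MB, matching the limit chosen above
        System.out.println(decide(2_048, threshold));       // CACHE_AND_ALLOW_RETRY
        System.out.println(decide(734_003_200, threshold)); // FORWARD_WITHOUT_RETRY
        System.out.println(decide(-1, threshold));          // FORWARD_WITHOUT_RETRY
    }
}
```

A global size limit is the stricter variant of the same idea: instead of forwarding the large request without retry, it is rejected outright.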

To summarize, this incident led us to make the following improvements to how we use spring-cloud-gateway:

- Add the startup parameter -Dio.netty.allocator.type=unpooled

- Add a global request size limit filter

- Do not allow file uploads to be received directly by the web server

Why the arthas trace earlier showed such short durations

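As explained earlier, this is caused by reactor's asynchronous, non-blocking model. In the reactor programming model, everything actually happens at the moment of subscribe; what comes before is only a definition and involves no execution. Here is a simple example to get a feel for it.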
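The sketch below is a plain-Java analogy that uses java.util.function.Supplier instead of Reactor, so it only mirrors the "define now, execute later" idea; the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Plain-Java analogy for lazy pipelines: defining the work does not run it.
class LazyPipelineDemo {
    // "Assembly": records that it ran and returns a description of the work.
    // Nothing slow happens here, so timing this method shows almost nothing.
    static Supplier<String> define(List<String> events) {
        events.add("defined");
        return () -> {
            events.add("executed"); // the real work happens only when someone asks
            return "body";
        };
    }

    public static void main(String[] args) {
        List<String> events = new ArrayList<>();
        Supplier<String> pipeline = define(events);
        System.out.println(events);         // [defined]
        System.out.println(pipeline.get()); // body
        System.out.println(events);         // [defined, executed]
    }
}
```

A trace on define would report a tiny duration, because the expensive part runs later, when get (the stand-in for subscribe here) is called, possibly on another thread.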

Notes taken along the way

- Monitoring the JVM remotely with JVisualVM/JConsole

- The reactor programming model

- Some analysis of JVM memory usage

I spent quite a lot of time studying each of these topics; I will see whether they can become separate articles later.

References

- Native Memory Tracking 详解(1):基础介绍 https://mp.weixin.qq.com/s?__biz=MzkyNTMwMjI2Mw==&mid=2247489204&idx=1&sn=c3a9ac4f291543b3504cd1bc1adf0bdb

- 聊聊HotSpot VM的Native Memory Tracking https://cloud.tencent.com/developer/article/1406522

- 全网最硬核 JVM 内存解析 - 1.从 Native Memory Tracking 说起 https://juejin.cn/post/7225871227743043644

- Spring Boot引起的“堆外内存泄漏”排查及经验总结 https://tech.meituan.com/2019/01/03/spring-boot-native-memory-leak.html

- Spring Cloud Gateway之踩坑日记 https://blog.csdn.net/manzhizhen/article/details/115386684

- 今咱们来聊聊JVM 堆外内存泄露的BUG是如何查找的 https://cloud.tencent.com/developer/article/1129904

- 一次完整的JVM堆外内存泄漏故障排查记录 https://heapdump.cn/article/1821465

- 一次压缩引发堆外内存过高的教训 https://cloud.tencent.com/developer/article/1700083

- jvisualvm远程连接的三种方式 https://blog.csdn.net/BushQiang/article/details/114709682

- EDAS 让 Spring Cloud Gateway 生产可用的二三策 https://www.cnkirito.moe/edas-scg-agent/

- Author:黑微狗

- URL:https://blog.hwgzhu.com/article/spring-cloud-gateway-memory-leak

- Copyright:All articles in this blog, except for special statements, adopt BY-NC-SA agreement. Please indicate the source!